Note: This is not the current version of the report!

Skinive improved the accuracy of the neural network in 2021 and published the data. The latest version of the report is available at the link – https://skinive.com/skinive-accuracy2021/

Table of contents

– ABSTRACT

1. INTRODUCTION

2. SKINIVE ALGORITHM TO ANALYZE THE SKIN LESION IMAGES

2.1 Nosologies & Classes

2.2 Neural network architecture

2.3 Data security

3. MATERIALS AND RESULTS

– CONCLUSION

– REFERENCES

– AI EXPERT REVIEW

– MEDICAL EXPERT REVIEW

Analysis of Skinive algorithm’s accuracy for risk assessment of skin conditions, based on machine learning algorithms.

Authors: K.Atstarov, A.Lian, V.Shpudeiko, A.Ahushevich, I.Lichko

Abstract

Background

Machine learning algorithms for medical image processing are now achieving expert-level accuracy and are being actively introduced into medical practice. However, there is no objective assessment of the machine learning used to classify skin lesions in a number of smartphone applications. The lack of objective methodologies and open datasets for evaluating these algorithms (such as ImageNet for general object recognition in images) hinders objective assessment by specialists and impedes the widespread use of this technology in public health.

Objective

In this study, we experimentally evaluate the accuracy of the Skinive algorithms and compare the results with a previously published study on skin cancer risk assessment (1*).

Methods

This publication presents in detail the results obtained with our smartphone application. Skinive uses a machine learning algorithm to calculate a risk rating for skin pathologies. The algorithm is trained on 63,955 images, all of which have been assessed for risk by dermatologists.

To evaluate the sensitivity of the algorithm, three validation datasets are used:

- (Pre) malignant – 285 cases of skin cancer and pre-cancerous conditions;

- HPV – 285 cases of Human Papilloma Virus;

- Acne – 285 cases of acne, milia, rosacea.

We calculate specificity on a separate set containing 6000 benign cases.
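The evaluation protocol described above amounts to running the classifier over each validation set and counting how many images fall into each risk level. The following is a minimal sketch, assuming a binary low/high risk output; `predict_risk`, `tally_risk_levels`, `evaluate` and `dummy_predict` are hypothetical names, and the dummy predictor is a placeholder rule, not the Skinive network.

```python
from collections import Counter
from typing import Callable, Iterable

# Assumed binary risk levels; the report distinguishes only "low" and "high" risk.
LOW, HIGH = "low", "high"


def tally_risk_levels(
    images: Iterable[str],
    predict_risk: Callable[[str], str],
) -> Counter:
    """Count how many images in one validation set are assigned to each risk level.

    `predict_risk` is a hypothetical stand-in for the neural network; it is
    assumed to return either LOW or HIGH for a single image.
    """
    counts = Counter({LOW: 0, HIGH: 0})
    for image in images:
        label = predict_risk(image)
        if label not in (LOW, HIGH):
            raise ValueError(f"unexpected risk label: {label!r}")
        counts[label] += 1
    return counts


def evaluate(
    validation_sets: dict[str, list[str]],
    predict_risk: Callable[[str], str],
) -> dict[str, Counter]:
    """Run the classifier over every validation set and return per-set tallies."""
    return {name: tally_risk_levels(images, predict_risk)
            for name, images in validation_sets.items()}


def dummy_predict(name: str) -> str:
    # Placeholder rule for illustration only: flags everything not named "benign".
    return LOW if "benign" in name else HIGH


# Usage with toy data: the file names are placeholders.
toy_sets = {
    "acne": ["acne_1.jpg", "acne_2.jpg", "benign_like.jpg"],
    "benign": ["benign_1.jpg", "benign_2.jpg"],
}
print(evaluate(toy_sets, dummy_predict))
```

In the real evaluation, the tallies for the three pathology sets yield sensitivity (the share classified as high risk), and the tally for the benign set yields specificity (the share classified as low risk).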

Results

To reproduce the experiment of (1*), the authors prepared validation datasets with a similar distribution of images by nosology and used the Skinive neural network to analyze the images and classify their risk level. The results are summarized in the table below:

Sensitivity: 89.1% – neoplasms, 79.6% – HPV, 86.3% – acne

Specificity: 93.5%

Risk Assessment Results

| Condition | Total cases | Classified as low risk | Classified as high risk | Sensitivity* |
|---|---|---|---|---|
| (Pre) malignant | 285 | 31 | 254 | 89.1% |
| Acne | 285 | 39 | 246 | 86.3% |
| HPV | 285 | 58 | 227 | 79.6% |

| Condition | Total cases | Classified as low risk | Classified as high risk | Specificity** |
|---|---|---|---|---|
| Benign | 6,000 | 5,607 | 393 | 93.5% |

* Sensitivity is the number of pathology cases (precancerous conditions and malignant tumors, acne, or HPV) that the algorithm correctly classified as high risk, divided by the total number of clinically confirmed cases in the corresponding set.

** Specificity is the number of benign cases correctly classified by the algorithm as low risk (true negatives), divided by the total number of clinically confirmed benign cases.
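The two definitions above reduce to simple ratios over the table counts: every pathology case classified as high risk is a true positive, and every benign case classified as low risk is a true negative. The sketch below recomputes both metrics from the reported counts; the `SetResult` container and the function names are illustrative, not part of the Skinive code base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SetResult:
    """Counts for one validation set, as reported in the Risk Assessment Results table."""
    total: int
    low_risk: int   # cases the algorithm classified as low risk
    high_risk: int  # cases the algorithm classified as high risk

    def __post_init__(self) -> None:
        # Every case must fall into exactly one of the two risk levels.
        if self.low_risk + self.high_risk != self.total:
            raise ValueError("low_risk + high_risk must equal total")


def sensitivity(r: SetResult) -> float:
    """Pathology cases classified as high risk (true positives) / all confirmed cases."""
    return r.high_risk / r.total


def specificity(r: SetResult) -> float:
    """Benign cases classified as low risk (true negatives) / all benign cases."""
    return r.low_risk / r.total


pathology_sets = {
    "(Pre) malignant": SetResult(total=285, low_risk=31, high_risk=254),
    "Acne": SetResult(total=285, low_risk=39, high_risk=246),
    "HPV": SetResult(total=285, low_risk=58, high_risk=227),
}
benign = SetResult(total=6000, low_risk=5607, high_risk=393)

for name, result in pathology_sets.items():
    print(f"{name}: sensitivity {sensitivity(result):.1%}")
print(f"Benign: specificity {specificity(benign):.2%}")
```

Running it reproduces the sensitivity values in the table (89.1%, 86.3%, 79.6%). For the benign set it prints 93.45%, i.e. 5,607 / 6,000, which the table rounds to 93.5%.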

The results above closely follow the experimental setup proposed in (1*) in terms of class distribution and total number of cases.
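As a quick check of the class distribution mentioned above, the validation pool can be tallied from the set sizes given in the Methods section. This sketch only restates the report's own counts; the corresponding figures from (1*) are not reproduced here and would have to be taken from that paper.

```python
# Validation set sizes as listed in the Methods section of the abstract.
validation_sizes = {
    "(Pre) malignant": 285,
    "HPV": 285,
    "Acne": 285,
    "Benign": 6000,
}

total = sum(validation_sizes.values())  # all cases in the validation pool
pathology_total = total - validation_sizes["Benign"]

for name, n in validation_sizes.items():
    print(f"{name:>16}: {n:>5} cases ({n / total:.1%} of the pool)")
print(f"Total: {total} cases, of which {pathology_total} are pathology cases")
```

The pool contains 6,855 cases in total: the benign set accounts for about 87.5% of it, and each of the three pathology sets for about 4.2%.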

Conclusions

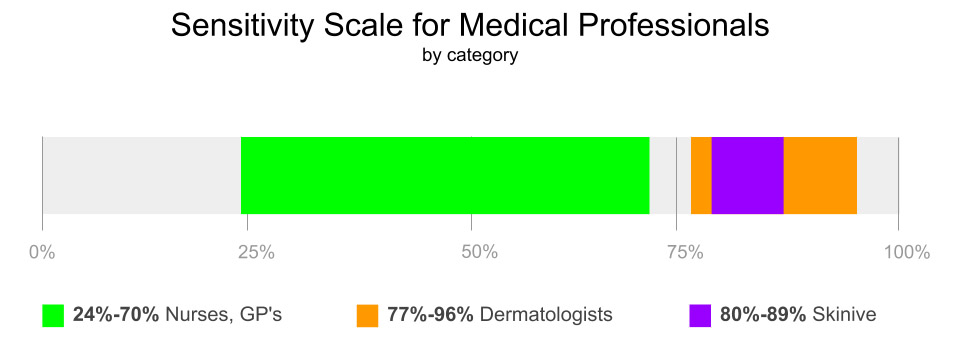

The accuracy of the neural network is comparable to the accuracy of dermatologists reported in (5*, 6*), and the system can be considered an expert system supporting medical decision-making.

The results of the comparative analysis cannot be interpreted unambiguously and are not fully reliable, because the datasets were drawn from different sources. The lack of open validation datasets (images) and of a common approach to validation among developers prevents independent benchmarking, which is needed to confirm the effectiveness of the method in general and to compare existing solutions objectively. Nevertheless, the results obtained above are on par with those of medical professionals and can be further improved with additional data and better-optimized algorithms.

Future research is needed to define the role and assess the impact of mobile applications on the health system and its users, and to discuss common methodologies for assessing the effectiveness of mobile applications that estimate the risk of skin diseases.

P.S.

We are open to collaborative research with other data science teams and can provide access to our validated dataset upon request.

We are ready to provide a full version of our study for the following purposes:

- publications in medical journals and print media;

- reviews by medical and technical experts;

- partners, corporate clients, investors (on NDA terms until the moment of official publication in open sources).



Reviews

Vladimir Nedovic

Technical Director at Rockstart AI Accelerator

15 years of experience with automated image analysis and data science

The research work described in this article was conducted by the authors during the Rockstart AI acceleration program in 's-Hertogenbosch, the Netherlands. The purpose of the study was threefold:

- Identify a representative and balanced set of images that can be made available to all researchers utilizing computer vision and machine learning for classification of skin lesions.

- Create a benchmark for evaluation of all similar methods in this field.

- Compare Skinive results to state of the art in the field on the basis of the defined benchmark and data; put those results in the context of medical professionals’ performance.

As part of the acceleration program, I held weekly sessions with the Skinive technical team, overseeing the experimental setup, dataset composition, class distribution and other relevant aspects of creating such a benchmark. To the best of my knowledge, the benchmark satisfies all the necessary criteria and is thus a good candidate for a gold standard that other researchers in the field can use to test the performance of their algorithms.

In addition, the authors and I rigorously inspected the results of all the experiments, including those shown in this report. The authors went to great lengths to ensure that these results are not only optimal for their use case, but also comparable to the state of the art in the field as well as to human medical experts.

Alexandra Aniskevich

Assistant of the Department of Skin and Sexually Transmitted Diseases at the Belarusian State Medical University

Work experience: since 2009

On the article “Analysis of Skinive algorithm’s accuracy for risk assessment of skin conditions, based on machine learning algorithms.” (Authors: K.Atstarov, A.Lian, V.Shpudeiko, A.Ahushevich, I.Lichko).

This article is devoted to an urgent problem in dermatovenerology: the prospects for using machine learning algorithms for medical image processing in medicine, which could improve the early diagnosis of skin oncopathologies. The aim of the study was to evaluate the diagnostic accuracy of the Skinive mobile application and to compare the results with the previously published work of Skinvision B.V.

As a result of the study, the authors established the sensitivity (79.6%–89.1%) and specificity (93.5%) of the Skinive mobile application, which indicates high diagnostic performance; however, the sensitivity for detecting skin cancer needs to be improved.

Conclusion: This article was written at a high scientific level. The structure of the article consistently reflects the logic of the study. It should be noted that the article is written in clear language, not overloaded with highly specialized terminology. The findings of the authors are well-founded. The results of the work may be useful to oncodermatologists, dermatovenerologists, as well as general practitioners.

References:

1. Accuracy of a smartphone application for triage of skin lesions based on machine learning algorithms

A. Udrea, G.D. Mitra, D. Costea, E.C. Noels, M. Wakkee, D.M. Siegel, T.M. de Carvalho, T.E.C. Nijsten. JEADV; accepted for publication. Published on September 08, 2019.

https://onlinelibrary.wiley.com/doi/10.1111/jdv.15935

2. Where machines could replace humans—and where they can’t (yet)

Michael Chui, James Manyika, and Mehdi Miremadi

https://www.mckinsey.com/business-functions/digital-mckinsey/our-insights/where-machines-could-replace-humans-and-where-they-cant-yet

3. The practice of radiology needs to change

Giles Maskell. Published on June 19, 2017

http://blogs.bmj.com/bmj/2017/06/19/giles-maskell-the-practice-of-radiology-needs-to-change/

4. Using Deep Learning to Inform Differential Diagnoses of Skin Diseases

Yuan Liu, PhD, Software Engineer and Peggy Bui, MD, Google Health. Published on September 12, 2019

https://ai.googleblog.com/2019/09/using-deep-learning-to-inform.html

5. Assessing diagnostic skill in dermatology: a comparison between general practitioners and dermatologists.

Tran H, Chen K, Lim AC, Jabbour J, Shumack S. Published in November, 2005

https://www.ncbi.nlm.nih.gov/pubmed/16197420

6. Comparison of dermatologic diagnoses by primary care practitioners and dermatologists. A review of the literature.

Federman DG, Concato J, Kirsner RS. Published in April, 1999

https://www.ncbi.nlm.nih.gov/pubmed/10101989

7. The 2019 novel coronavirus disease (COVID-19) pandemic: A review of the current evidence.

Chatterjee P, Nagi N, Agarwal A, Das B, Banerjee S, Sarkar S, Gupta N, Gangakhedkar RR. Published on March 30, 2020

https://www.ncbi.nlm.nih.gov/pubmed/32242874

8. Accuracy classification score.

scikit-learn developers (BSD License). Published in October, 2019

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.accuracy_score.html

9. CrossEntropyLoss

Torch Contributors

https://pytorch.org/docs/stable/nn.html#crossentropyloss

10. BCELoss

Torch Contributors

https://pytorch.org/docs/stable/nn.html#torch.nn.BCELoss

11. AWS GDPR Data Processing Addendum – Now Part of Service Terms

Chad Woolf. Published on May 22, 2018

https://aws.amazon.com/blogs/security/aws-gdpr-data-processing-addendum/

12. Navigating GDPR Compliance on AWS

Amazon Web Services, Inc. or its affiliates. Published in October, 2019

https://d1.awsstatic.com/whitepapers/compliance/GDPR_Compliance_on_AWS.pdf